Technical Architecture

OwenWilliams (talk | contribs) mNo edit summary |

Revision as of 12:13, 2 January 2018

Platform Goals & Desired Outcomes

- Modular / component based design that allows for the system to evolve over time

- A system designed to plug into or take advantage of community services, software, and processes

- Flexibility to add, remove, and modify a diverse set of content types

- Ability to easily improve and iterate on the user interface

- Provide machine access to Folger data holdings to the public

- Develop a platform with a low cost of entry for other institutions to adopt

Logical Architecture

Platform Components

Automated Data Import & Mapping Services

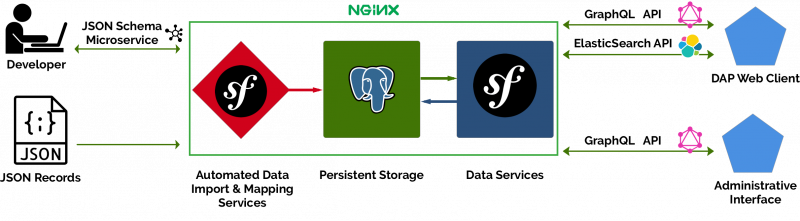

The automated data import and mapping services are made up of three major sub-components: a JSON importing web interface, a flexible data validation service written in Symfony, and a generalized system for capturing and storing binary assets that leverages PostgreSQL's ability to act as both a relational database and a NoSQL / document storage repository.

Data Import

The original roadmap envisioned a system that could be configured to request metadata directly from external systems, process that data from its export format into the DAP's internal data format, and validate that the fields and data structures required for that DAP content type were present. During our prototyping we investigated the ETL operations required to import data exported from Ex Libris Voyager and the Luna Imaging server.

This process helped identify the requirements and functionality needed for the general content import pipeline, but it also led us to modify the technical approach to focus on importing a more standardized JSON format. This approach allows many more systems to have their data imported into the DAP without additional development or deployments to the core DAP system, and it helps establish a JSON data standard for various cultural institution content types.

Content Types

Different sorts of content can be configured within the DAP. Each content type is defined within the system by a JSON schema that identifies the field names, required fields, and nullable fields for that content type. The system has been designed to allow additional fields that aren't part of this schema to be included in DAP records. These additional fields will be exposed and queryable via the API, but typically will not be leveraged by the search client or other implementations that aren't aware of them.
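As a rough illustration of these rules, the following Python sketch checks a record against a simplified content-type definition. The `ContentTypeSchema` class, the `validate_record` helper, and the field names are all hypothetical. The DAP itself describes content types with JSON schemas and validates them in its Symfony service, which this sketch does not attempt to reproduce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ContentTypeSchema:
    """Simplified stand-in for a DAP content-type schema (hypothetical)."""
    name: str
    fields: frozenset     # every field name the schema knows about
    required: frozenset   # fields that must be present in a record
    nullable: frozenset   # fields that are allowed to hold None


def validate_record(schema, record):
    """Return a list of validation errors; an empty list means the record passes."""
    errors = []
    for name in sorted(schema.required):
        if name not in record:
            errors.append(f"missing required field: {name}")
    for name in sorted(schema.fields):
        # Only fields named by the schema are checked for nulls.
        if name in record and record[name] is None and name not in schema.nullable:
            errors.append(f"field may not be null: {name}")
    # Fields outside the schema are accepted as-is and never cause an error.
    return errors


# Illustrative schema and record; none of these names come from the DAP.
article = ContentTypeSchema(
    name="article",
    fields=frozenset({"title", "body", "summary"}),
    required=frozenset({"title", "body"}),
    nullable=frozenset({"summary"}),
)
record = {"title": "New exhibition", "body": None, "summary": None, "legacy_id": "x-17"}
errors = validate_record(article, record)
```

Here `errors` contains a single entry for the null `body` field; the null `summary` is permitted, and the extra `legacy_id` field passes through untouched.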

Content Types Hierarchy

While it is possible for the DAP to be configured with a unique JSON schema for each content type, it is also possible to use a more generalized schema for a collection of content types. Within the DAP configuration a hierarchy of content types can be defined, with each level of the hierarchy having a default schema that is used if a child content type does not specify its own validation schema. This allows a variety of logical content types to be specified in the system while sharing field sets. For example, if you wanted to store both press releases and news announcements within the DAP, and those content types had the same fields (or you created a content type with a superset of their fields), you could create an "article" schema and then define both News and Press Releases as children of article.

Default Content Type (Fallback Schema)


Schema Service

To help publicize which content types have been configured within the DAP, the system includes a micro-service API that can announce which schemas are available and provide the schema that will be used to validate a particular content type. Using the example above, querying the service for the press releases content type would return the article schema, querying it for the article content type would return the article schema, and querying it for an unconfigured content type such as "picture" would return the fallback schema.
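The lookup behaviour described here can be sketched in a few lines of Python. This is a minimal sketch, not the service's actual implementation: the `SCHEMAS` and `PARENTS` tables, the schema bodies, and the function names are all assumptions made for illustration.

```python
# Hypothetical configuration: every name and schema body here is illustrative.
FALLBACK_SCHEMA = {"title": "fallback", "required": []}

# Content types that define their own validation schema.
SCHEMAS = {
    "article": {"title": "article", "required": ["title", "body"]},
}

# Child content type -> parent content type.
PARENTS = {
    "news": "article",
    "press_release": "article",
}


def resolve_schema(content_type):
    """Return the schema that would be used to validate content_type.

    Walk up the hierarchy until some level defines a schema. Content types
    with no schema anywhere in their ancestry get the fallback schema.
    """
    seen = set()
    current = content_type
    while current is not None and current not in seen:
        seen.add(current)  # guard against accidental cycles in the config
        if current in SCHEMAS:
            return SCHEMAS[current]
        current = PARENTS.get(current)
    return FALLBACK_SCHEMA


def available_content_types():
    """List the configured content types the service could announce."""
    return sorted(set(SCHEMAS) | set(PARENTS))
```

With this configuration, `resolve_schema("press_release")` and `resolve_schema("article")` both return the article schema, while `resolve_schema("picture")` returns the fallback schema.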